Accurate mapping of Brazil nut trees (Bertholletia excelsa) in Amazonian forests using WorldView-3 satellite images and convolutional neural networks

Matheus P. Ferreira, Rodolfo G. Lotte, Francisco V. D’Elia, Christos Stamatopoulos, Do-Hyung Kim, Maria Beatriz N. Ribeiro, and Adam R. Benjamin

Abstract

Contextualization

The Brazil nut is one of the most important non-timber forest products in South America (Peres et al., 2003). It is extracted from Bertholletia excelsa Bonpl. (Lecythidaceae) trees that grow and bear fruit almost exclusively in natural forests rather than in plantations. Brazil nuts are an essential dietary item for local populations in Amazonia and are also traded in considerable volumes. In 2019, Brazil produced 32.9 thousand tons of Brazil nuts, which represented 11.1% of the economic profit generated by exploiting natural plant resources (IBGE, 2019). In addition to the nuts’ economic importance, the wood of B. excelsa trees has a high market value, which motivates logging activities that are illegal, given that the species is considered threatened by the Brazilian Ministry of Environment (MMA, 2019).

Recently, convolutional neural networks (CNNs), a type of deep learning method, have been hailed as a promising approach for identifying tree species in remote sensing images, especially in very-high-resolution (VHR) data (Kattenborn et al., 2021). CNNs are designed to automatically extract spatial patterns (e.g., shapes, edges, texture) of images using a set of convolution and pooling operations (Zhang et al., 2016), hence learning object-specific characteristics. In the case of tree species, such characteristics are mainly related to the canopy structure: the arrangement of leaves and branches in the crown or color patterns caused by flowering events. CNNs designed for semantic segmentation can simultaneously classify and detect individual tree crowns (ITCs), thus avoiding object-based approaches that require an ITC delineation step before classification. ITC delineation is a challenging task, particularly in tropical forests in which the tree crowns usually overlap and have highly variable sizes and shapes (Tochon et al., 2015; Wagner et al., 2018; G Braga et al., 2020).
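
As a minimal illustration of the convolution and pooling operations mentioned above, the sketch below applies a hand-written edge kernel and 2 × 2 max pooling to a tiny grayscale array. The toy image, the kernel values, and the function names `conv2d_valid` and `max_pool2x2` are assumptions made for illustration only; in a CNN the kernels are learned from the training data rather than fixed by hand.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (2-D lists) with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def max_pool2x2(feature_map):
    """Keep the maximum of each non-overlapping 2 x 2 window."""
    pooled = []
    for i in range(0, len(feature_map) - 1, 2):
        row = []
        for j in range(0, len(feature_map[0]) - 1, 2):
            window = (
                feature_map[i][j], feature_map[i][j + 1],
                feature_map[i + 1][j], feature_map[i + 1][j + 1],
            )
            row.append(max(window))
        pooled.append(row)
    return pooled


# Toy 5 x 5 image: dark columns (0) on the left, bright columns (1) on the right.
toy_image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical kernel that responds to dark-to-bright vertical edges.
edge_kernel = [[-1, 1], [-1, 1]]

feature_map = conv2d_valid(toy_image, edge_kernel)  # 4 x 4 response map
pooled = max_pool2x2(feature_map)                   # 2 x 2 summary
```

The response map is non-zero only where the kernel straddles the dark-to-bright boundary, and pooling keeps that response while halving the spatial resolution.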

This study seeks to contribute knowledge regarding the potential of CNNs to map B. excelsa trees in Amazonian forests using WorldView-3 satellite images. We bring new insights into model training strategies and backbones for feature extraction. We present an approach that can map the spatial distribution of individual trees and groves of B. excelsa in large tracts of Amazonian forests.

Materials

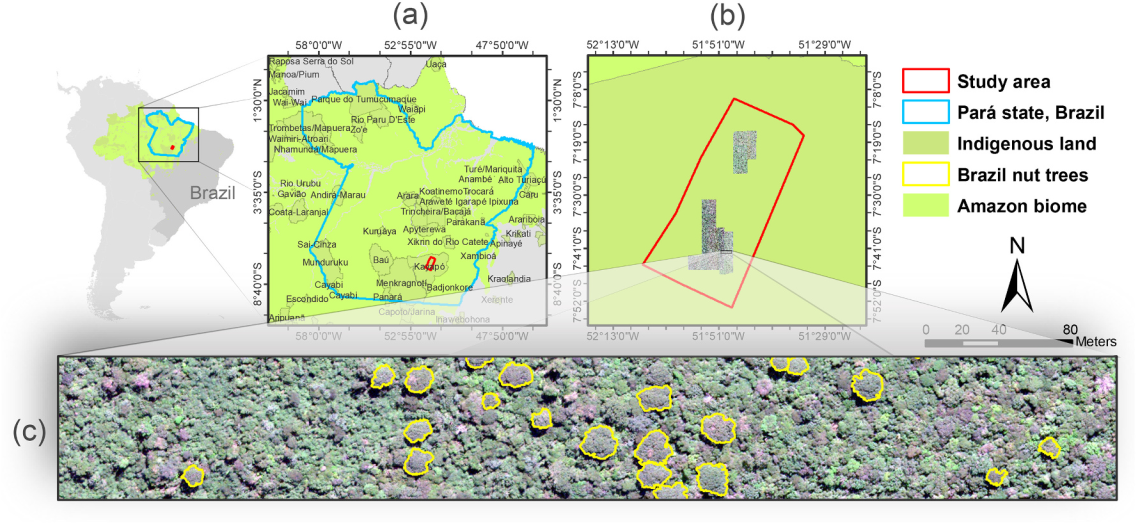

The study area is located in southern Pará State, southeastern Brazilian Amazon, within the Kayapó indigenous land (Fig. 1). The Kayapó territory, a regularized area of 3,284,005 ha, harbors more than 8580 indigenous people (IBGE, 2010) from several ethnicities or subgroups in the eastern part of the Amazon biome. Given that the Xingu River crosses the territory and numerous other small streams run through the inner forest, there are estimated to be 19 known indigenous communities and more than five isolated ones (Ricardo et al., 2000).

Cloud-free WorldView-3 images were acquired over the area of interest on 19 June 2016, at a maximum off-nadir view angle of 16.07°, encompassing an area of about 1071 km² (see the images within the red polygon in Fig. 1).

Location of the study area in Brazil. (a) Pará state and indigenous territories. (b) Study area located within the Kayapó reserve. (c) True color composition of a WorldView-3 scene (pixel size = 30 cm) showing individual tree crowns (yellow polygons) of Brazil nut trees (Bertholletia excelsa)

Methodology

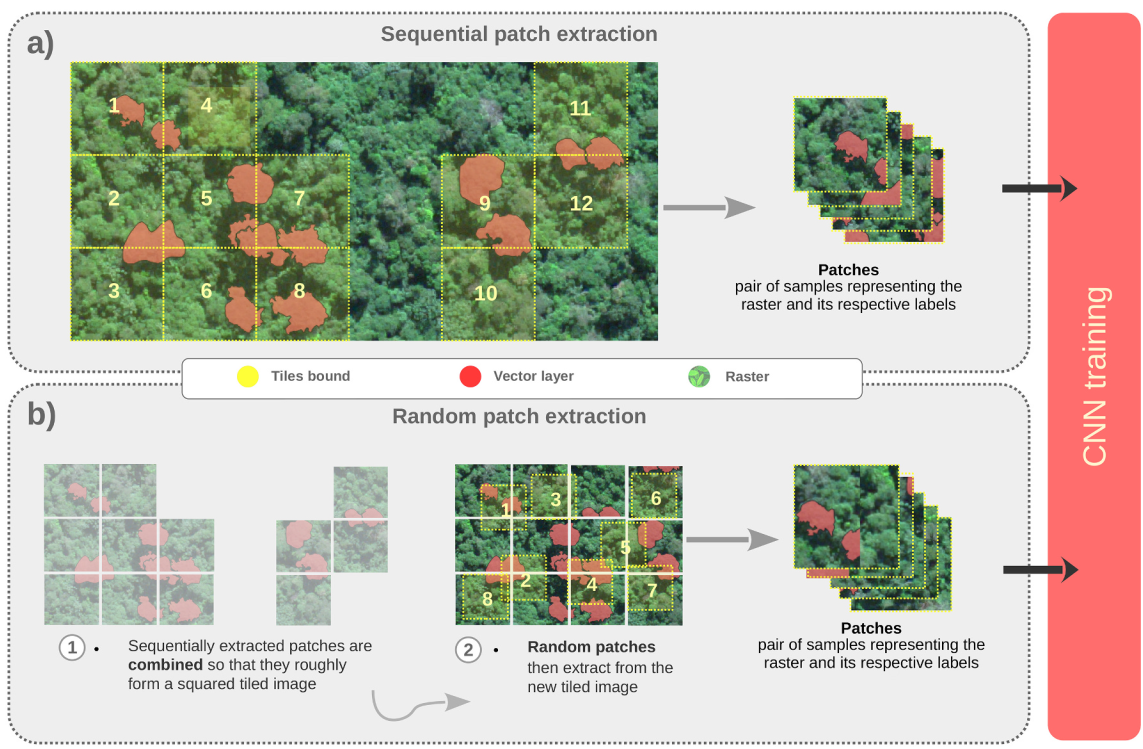

Illustration of two approaches used to train the convolutional neural network (CNN) model. (a) Sequential patch extraction. The patches are sequentially extracted from the WorldView-3 image based on the manually delineated individual tree crowns’ boundaries. These patches are then used to train a CNN model. (b) Random patch extraction. The sequentially extracted patches are combined so that they roughly form a squared tiled image, and, from this image, patches are randomly extracted
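
The random patch extraction described in (b) can be sketched roughly as follows. This is only an illustration under stated assumptions: the helper names `tile_patches` and `random_patches`, the row-by-row tiling order, the zero fill for unused mosaic slots, and the toy 4 × 4 patches are all hypothetical and are not taken from the original study. In practice the same cropping would also be applied to the label masks.

```python
import math
import random


def tile_patches(patches, patch_size):
    """Place square patches row by row into a roughly square mosaic."""
    per_row = math.ceil(math.sqrt(len(patches)))
    n_rows = math.ceil(len(patches) / per_row)
    # Unused slots stay filled with 0 (an assumption of this sketch).
    mosaic = [[0] * (per_row * patch_size) for _ in range(n_rows * patch_size)]
    for idx, patch in enumerate(patches):
        r0 = (idx // per_row) * patch_size
        c0 = (idx % per_row) * patch_size
        for i in range(patch_size):
            for j in range(patch_size):
                mosaic[r0 + i][c0 + j] = patch[i][j]
    return mosaic


def random_patches(mosaic, patch_size, n_samples, seed=0):
    """Crop `n_samples` patches at uniformly random positions in the mosaic."""
    rng = random.Random(seed)
    max_r = len(mosaic) - patch_size
    max_c = len(mosaic[0]) - patch_size
    crops = []
    for _ in range(n_samples):
        r = rng.randint(0, max_r)
        c = rng.randint(0, max_c)
        crops.append([row[c:c + patch_size] for row in mosaic[r:r + patch_size]])
    return crops


# Five toy "sequential" patches, each filled with its own index (1..5).
patch_size = 4
sequential = [[[k] * patch_size for _ in range(patch_size)] for k in range(1, 6)]

mosaic = tile_patches(sequential, patch_size)             # 8 rows x 12 columns
crops = random_patches(mosaic, patch_size, n_samples=10)  # ten 4 x 4 crops
```

Because the random crops may straddle the borders between neighboring tiles, one mosaic can yield many distinct training patches from a small set of delineated crowns.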

Results

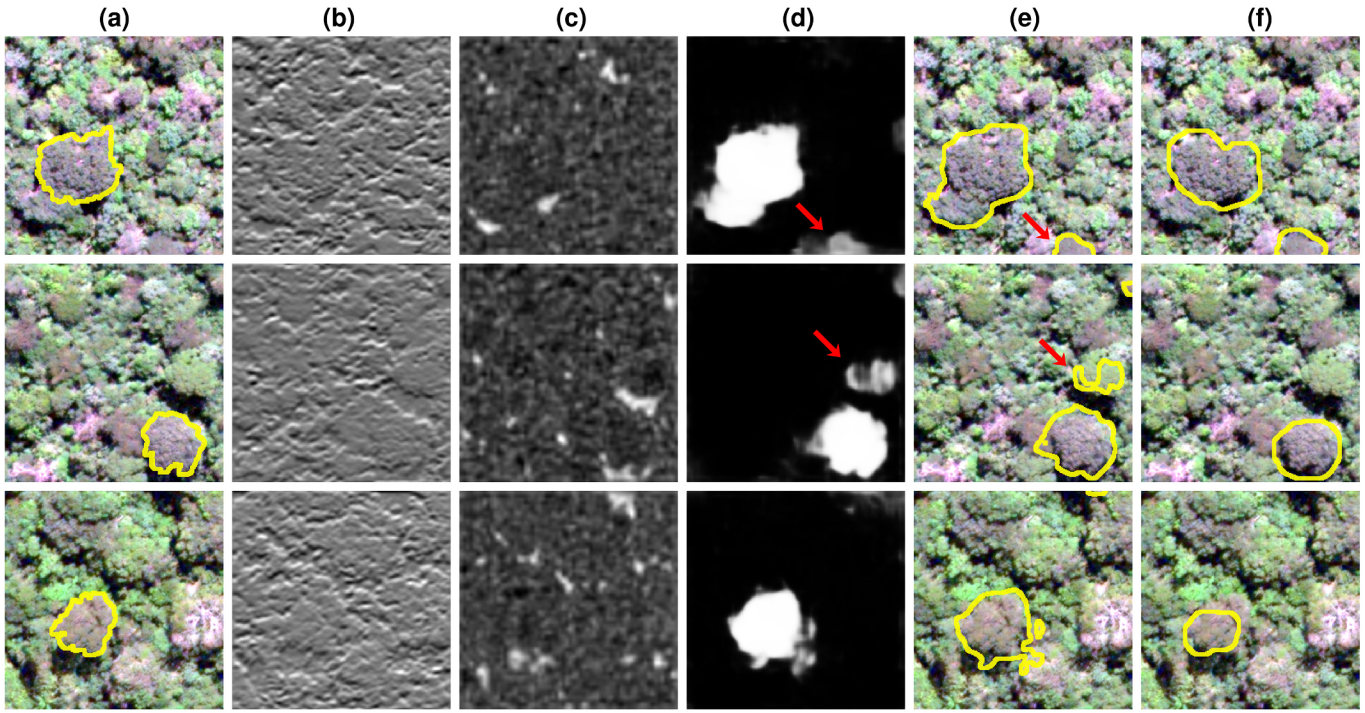

Illustration of the semantic segmentation process with the DeepLabv3+ architecture and ResNet-18 as the backbone network. The input image patches of 256 × 256 pixels are passed through convolutional and rectified linear unit layers that learn features from Brazil nut trees (Bertholletia excelsa). These features are used to distinguish them from other types of trees. (a) Ground truth individual tree crowns of B. excelsa overlaid on a true color composition of the WorldView-3 image (pixel size = 30 cm); (b) Strongest activation channel of the first convolutional layer. Note that this channel activates on edges; (c) Strongest activation channel of an intermediate layer that activates on shaded portions of the input image; (d) Activation of the penultimate layer of the network (before the softmax layer) showing strong activations in areas of B. excelsa occurrence; (e) Semantic segmentation results provided by the network; and (f) Individual trees detected by using the method proposed by Ferreira et al. (2020). The red arrows in (d) and (e) point to areas segmented by the network that did not coincide with the ground truth.
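
The step from the penultimate-layer activations in (d) to the segmentation in (e) can be illustrated with a per-pixel softmax followed by an argmax. The sketch below is a generic illustration and not the network used in the study: the two-class layout `[background, B. excelsa]`, the toy score values, and the function names `softmax` and `segment` are assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of class scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def segment(score_map):
    """Turn an H x W grid of per-class scores into an H x W grid of class indices."""
    mask = []
    for row in score_map:
        mask_row = []
        for pixel_scores in row:
            probs = softmax(pixel_scores)
            mask_row.append(probs.index(max(probs)))  # most probable class
        mask.append(mask_row)
    return mask


# Toy 2 x 2 score map; each pixel holds hypothetical [background, B. excelsa] scores.
toy_scores = [
    [[2.0, -1.0], [0.5, 1.5]],
    [[-0.3, 3.0], [1.0, 0.0]],
]
mask = segment(toy_scores)  # 1 marks pixels assigned to the B. excelsa class
```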

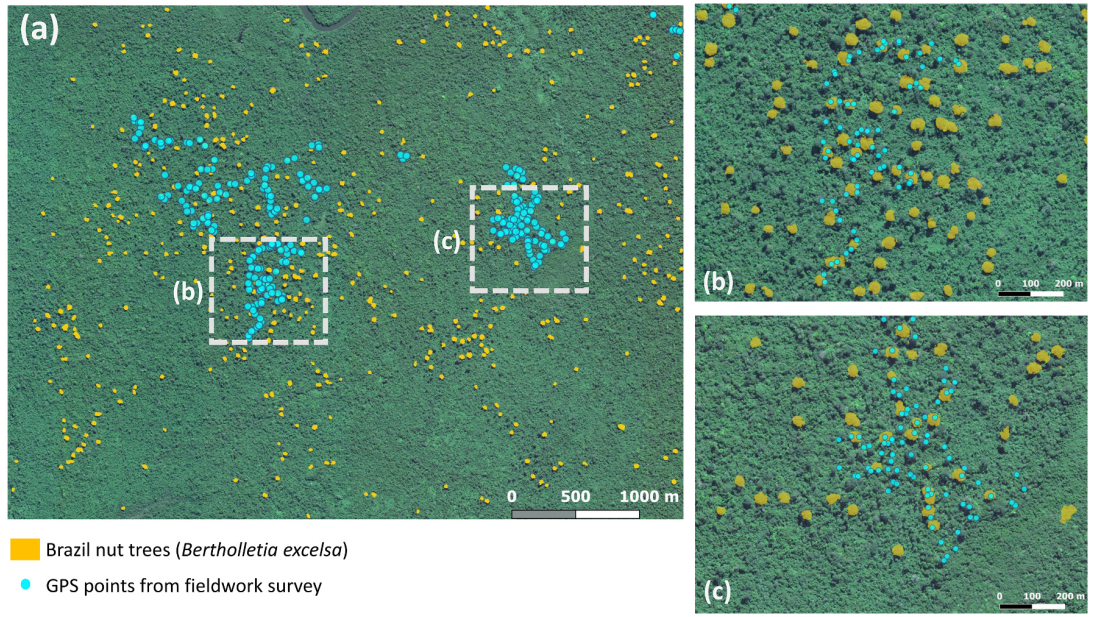

Individual tree crowns of Brazil nut trees (Bertholletia excelsa) mapped over Amazonian forests. (a) True color composition of a WorldView-3 scene (pixel size = 30 cm) overlaid with automatically detected ITCs of Brazil nut trees (yellow polygons) and GNSS information acquired in the field (cyan points). (b) and (c) show two areas of B. excelsa occurrence visited in the field.

Final considerations

Our study shows the potential of CNNs and WorldView-3 images to map B. excelsa trees in Amazonian forests. We propose a new model training strategy capable of achieving a high producer’s accuracy (>97%) with a reduced set of training patches. We confirm the effectiveness of the DeepLabv3+ architecture with the ResNet-18 as a backbone network for feature extraction. We show that the shadow of emergent B. excelsa trees is a crucial discriminative feature. The off-nadir imaging capabilities of WorldView-3 can affect the presence of shadows by distorting the image. Thus, it is essential to consider the off-nadir viewing angle of WorldView-3 images for mapping of B. excelsa and other emergent trees. Individual trees and groves of B. excelsa can be mapped accurately over large tracts of Amazonian forests. This helps forest managers, ecologists, and indigenous populations to conserve and manage this important tree species.
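
For readers unfamiliar with the term, producer's accuracy is the share of reference (ground truth) samples of a class that the map labels correctly, whereas user's accuracy is the share of mapped samples of that class that are correct. The sketch below computes both from confusion counts; the counts are invented for illustration and are not results from the study, and the function names are assumptions.

```python
def producers_accuracy(true_pos, false_neg):
    """Fraction of reference samples of the class that were mapped correctly."""
    return true_pos / (true_pos + false_neg)


def users_accuracy(true_pos, false_pos):
    """Fraction of samples mapped as the class that truly belong to it."""
    return true_pos / (true_pos + false_pos)


# Hypothetical counts for the B. excelsa class (not taken from the paper).
tp, fn, fp = 90, 10, 30

pa = producers_accuracy(tp, fn)  # 90 / 100
ua = users_accuracy(tp, fp)      # 90 / 120
```

A high producer's accuracy therefore means few B. excelsa crowns are missed, but it does not by itself indicate how many other trees are mistakenly mapped as B. excelsa.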

Cite this paper

@article{ferreira2021accurate,
  title={Accurate mapping of Brazil nut trees (Bertholletia excelsa) in Amazonian forests using WorldView-3 satellite images and convolutional neural networks},
  author={Ferreira, Matheus Pinheiro and Lotte, Rodolfo Georjute and D'Elia, Francisco V and Stamatopoulos, Christos and Kim, Do-Hyung and Benjamin, Adam R},
  journal={Ecological Informatics},
  volume={63},
  pages={101302},
  year={2021},
  publisher={Elsevier}
}